Object Fusion Tracker

| Related documentation |
|---|
| Visual SLAM Tool |
| Tracker Coordinate System in Unity |
| Visual SLAM Learning Guide |

The Object Fusion Tracker loads the map file and renders a 3D object on it. After the MAXST SDK recognizes the target and acquires its initial pose, tracking is handed over to Nreal SLAM.

The main difference from the existing Object Tracker is the tracking source. The existing Object Tracker tracks using frames from the RGB camera, so tracking is lost when the target leaves the camera frame or has few feature points. The Object Fusion Tracker tracks through Nreal SLAM, which learns the environment around the target, so tracking can continue even when the target leaves the camera frame or has few feature points.

Please refer to the Visual SLAM Learning Guide to create a more precise map while scanning 3D space.

※ To use Nreal SLAM, you must enter the actual size of the target. (See Start / Stop Tracker)

Make Object Tracker Scene

Set Map

Add / Replace Map

Start / Stop Tracker

Use Tracking Information

Make Object Tracker Scene

Install MAXST AR SDK For Unity, MAXSTARSDK_FOR_NRSDK, and NRSDKForUnity_Release.

Create a new scene.

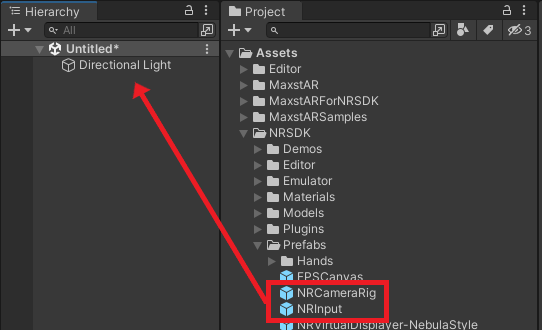

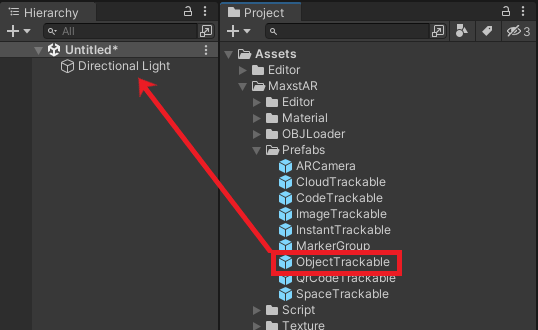

Delete the default Main Camera, then add Assets > NRSDK > Prefabs > NRCameraRig and NRInput, and Assets > MaxstAR > Prefabs > ObjectTrackable to your scene.

※ In the Project window, you need to add the license key to Assets > Resources > MaxstAR > Configuration.

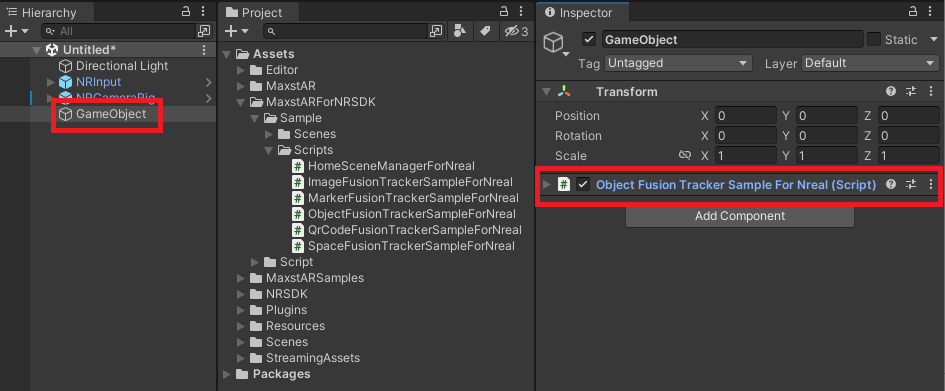

In the GameObject menu at the top, create an empty object and add the Assets > MaxstARSamples > Scripts > ObjectTrackerSampleForNreal script to it as a component.

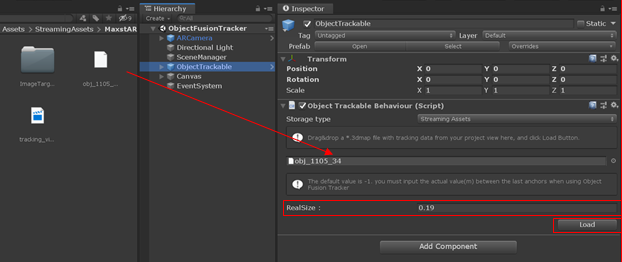

Place the map file created in the Visual SLAM scene in Assets > StreamingAssets > MaxstAR, then drag it onto the ObjectTrackable Inspector to set it. After entering the measured distance between the last two pins, press the Load button to load the map into the MapViewer described below.

※ If the map file is not placed under the StreamingAssets folder, the map file is not recognized.

After the map file is loaded, pins are overlaid on the trained object in Scene View and Game View. Some of the pins are marked with red rectangles in the figure below.

In the MapViewer Inspector, the 'Show Mesh' option is checked by default. Move the slider bar to choose a keyframe that is easy to work with.

※ MapViewer

- If you set a map file in ObjectTrackable, MapViewer is automatically created as a child of ObjectTrackable.

- Keyframe Id: When a map is created, the camera looks at the target from various directions, and a Keyframe is created for each direction that satisfies a certain condition. If you change Keyframe Id, the Mesh / Image of that Keyframe is displayed in Game View. Adjusting the Cube in each Keyframe lets you position it more precisely.

- Show Mesh: If checked, the image displayed in the Game View changes to Mesh.

- Transparent: If checked, Mesh / Image becomes transparent.

Place a virtual object as a child of ObjectTrackable. 'maxst_cube' is placed by default.

A pin marks where a virtual object should be located. Pins are set while the object map is created with the Visual SLAM Tool. Place your virtual object with reference to the pin positions.

Connect a webcam to your PC and press the 'Play' button. Point the webcam at the target object and check whether your virtual objects appear where you want them.

Set Map

Register the map file by calling AddTrackerData(), then call LoadTrackerData() so that the space can be tracked. To set a map, refer to the following code.

>ObjectTrackerSampleForNreal.cs

```csharp
private void AddTrackerData()
{
    foreach (var trackable in objectTrackablesMap)
    {
        // Skip trackables that have no map file assigned.
        if (trackable.Value.TrackerDataFileName.Length == 0)
        {
            continue;
        }

        if (trackable.Value.StorageType == StorageType.AbsolutePath)
        {
            // The file name is already a full path.
            TrackerManager.GetInstance().AddTrackerData(trackable.Value.TrackerDataFileName);
        }
        else
        {
            if (Application.platform == RuntimePlatform.Android)
            {
                // On Android, pass the file name with the second argument set to true.
                TrackerManager.GetInstance().AddTrackerData(trackable.Value.TrackerDataFileName, true);
            }
            else
            {
                // On other platforms, build the full path under StreamingAssets.
                TrackerManager.GetInstance().AddTrackerData(Application.streamingAssetsPath + "/" + trackable.Value.TrackerDataFileName);
            }
        }
    }

    // Load every map registered above.
    TrackerManager.GetInstance().LoadTrackerData();
}
```

Add / Replace Map

- Create a map file by referring to Visual SLAM Tool.

- Copy the received map file to the desired path.

- Set a map.

- If you have an existing map file, call TrackerManager.GetInstance().RemoveTrackerData() first, then call AddTrackerData() and LoadTrackerData().
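The replacement order in the last step can be sketched as follows. This is a minimal, SDK-independent illustration: `MapReplacer` and its delegate parameters are hypothetical names introduced here, and in a Unity script each delegate would presumably wrap the corresponding TrackerManager.GetInstance() call named above.

```csharp
using System;

// Hypothetical helper (not part of the MAXST SDK) that enforces the
// remove -> add -> load order when swapping one map for another.
public static class MapReplacer
{
    public static void ReplaceMap(
        Action removeTrackerData,        // assumed to wrap RemoveTrackerData()
        Action<string> addTrackerData,   // assumed to wrap AddTrackerData(path)
        Action loadTrackerData,          // assumed to wrap LoadTrackerData()
        string newMapPath)
    {
        if (removeTrackerData == null) throw new ArgumentNullException(nameof(removeTrackerData));
        if (addTrackerData == null) throw new ArgumentNullException(nameof(addTrackerData));
        if (loadTrackerData == null) throw new ArgumentNullException(nameof(loadTrackerData));
        if (string.IsNullOrEmpty(newMapPath))
        {
            throw new ArgumentException("Map path must not be empty.", nameof(newMapPath));
        }

        removeTrackerData();          // 1. drop the existing map
        addTrackerData(newMapPath);   // 2. register the new map file
        loadTrackerData();            // 3. load what was registered
    }
}
```

Passing the calls in as delegates keeps the ordering logic testable outside Unity. Validating the path before anything is removed means a bad argument does not leave the tracker without a map.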

Start / Stop Tracker

TrackerManager.GetInstance().IsFusionSupported()

This function checks whether your device supports Fusion. It returns a bool: true if the device in use is supported, false if it is not.

TrackerManager.GetInstance().GetFusionTrackingState();

This function returns the current Fusion tracking state.

The return value is an int: -1 means that tracking is not working properly, and 1 means that it is working properly.
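The two documented return values can be wrapped in a small helper so that scene code does not compare against bare numbers. This is a minimal sketch: `FusionStateHelper` is a hypothetical name, not an SDK type, and any value other than -1 or 1 is reported as undocumented because this page describes only those two.

```csharp
// Hypothetical helper for interpreting the int returned by
// GetFusionTrackingState(); only -1 and 1 are described in this guide.
public static class FusionStateHelper
{
    public const int NotTracking = -1; // tracking is not working properly
    public const int Tracking = 1;     // tracking is working properly

    // True only for the documented "working properly" value.
    public static bool IsTracking(int state)
    {
        return state == Tracking;
    }

    // Human-readable description, e.g. for an on-screen status label.
    public static string Describe(int state)
    {
        switch (state)
        {
            case Tracking:
                return "Fusion tracking is working properly";
            case NotTracking:
                return "Fusion tracking is not working properly";
            default:
                return "Undocumented fusion tracking state: " + state;
        }
    }
}
```

In a scene script, the argument would be the value obtained from GetFusionTrackingState(), for example to show a hint asking the user to look around until tracking recovers.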

After loading the map, refer to the following code to start / stop the tracker.

>ObjectTrackerSampleForNreal.cs

```csharp
void Start()
{
    ...
    CameraDevice.GetInstance().SetARCoreTexture();
    TrackerManager.GetInstance().StartTracker(TrackerManager.TRACKER_TYPE_OBJECT_FUSION);
    // First call: path of the 3dmap file.
    TrackerManager.GetInstance().AddTrackerData("ObjectTarget/obj_1010_7.3dmap", true);
    // Second call: actual size of the 3dmap, in meters.
    TrackerManager.GetInstance().AddTrackerData("{\"object_fusion\":\"set_length\",\"object_name\":\"obj_1010_7\", \"length\":0.12}", true);
    TrackerManager.GetInstance().LoadTrackerData();
    ...
}

void OnApplicationPause(bool pause)
{
    ...
    TrackerManager.GetInstance().StopTracker();
    ...
}

void OnDestroy()
{
    TrackerManager.GetInstance().StopTracker();
    TrackerManager.GetInstance().DestroyTracker();
    ...
}
```

In the first call to AddTrackerData(), pass the path of the 3dmap file.

The second call to AddTrackerData() sets the actual size of the 3dmap. (Unit: m)

object_name is the file name of the 3dmap without its extension (obj_1010_7 in the code above).

length is the actual distance between the last two anchors you marked when creating the 3dmap. (Please refer to Visual SLAM Tool.)

You must enter the actual size of the target. If you do not enter the correct actual size, the content will not be augmented properly.

The calls must be made in the following order: StartTracker(), AddTrackerData(), LoadTrackerData().
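Because object_name and length have to match the map file and the measured distance, it can help to build the second AddTrackerData() argument from the map path instead of typing the JSON by hand. The following is a minimal sketch that assumes the JSON layout shown in the sample above. `FusionLengthCommand` is a hypothetical helper, not part of the MAXST SDK, and it assumes the file name contains no characters that need JSON escaping.

```csharp
using System;
using System.Globalization;
using System.IO;

// Hypothetical helper that builds the "set_length" string passed to the
// second AddTrackerData() call. The JSON layout is copied from the sample.
public static class FusionLengthCommand
{
    public static string Build(string mapPath, double lengthInMeters)
    {
        if (string.IsNullOrEmpty(mapPath))
        {
            throw new ArgumentException("Map path must not be empty.", nameof(mapPath));
        }
        if (lengthInMeters <= 0)
        {
            throw new ArgumentOutOfRangeException(nameof(lengthInMeters), "Length must be positive.");
        }

        // object_name is the 3dmap file name without directory or extension.
        string objectName = Path.GetFileNameWithoutExtension(mapPath);

        // Invariant culture guarantees a '.' decimal separator on every device;
        // "0.####" keeps at most four decimals (0.1 mm), an arbitrary choice here.
        string length = lengthInMeters.ToString("0.####", CultureInfo.InvariantCulture);

        return "{\"object_fusion\":\"set_length\",\"object_name\":\"" + objectName
            + "\", \"length\":" + length + "}";
    }
}
```

For the sample map, `FusionLengthCommand.Build("ObjectTarget/obj_1010_7.3dmap", 0.12)` produces the same string as the hard-coded argument in the code above. Formatting with the invariant culture matters on devices whose locale uses a comma as the decimal separator, which would otherwise yield invalid JSON.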

Use Tracking Information

To use the tracking information, refer to the following code.

>ObjectTrackerSampleForNreal.cs

void Update()

{

...

TrackingState state = TrackerManager.GetInstance().UpdateTrackingState();

TrackingResult trackingResult = state.GetTrackingResult();

for (int i = 0; i < trackingResult.GetCount(); i++)

{

Trackable trackable = trackingResult.GetTrackable(i);

objectTrackablesMap[trackable.GetName()].OnTrackSuccess(trackable.GetId(), trackable.GetName(), trackable.GetNRealPose());

}